These days, it’s hard to avoid AI. Is it the beginning of the end of civilization and culture? Or a route to relieving drudgery and improving human experience? Or is it just an empty marketing buzzword? And what does it have to do with fact checking anyway?

Types of Artificial Intelligence

Before we answer that last question, let’s unpack what we mean by AI, or artificial intelligence, as it’s a term whose meaning has changed over the years. For a long time, it was used almost synonymously with “machine learning”, which covers a wide range of algorithms that can learn from sets of example data. When Netflix recommends your next binge watch based on your and others’ viewing history, that’s machine learning. When your bank notices some unusual patterns of purchase and asks if your card has been stolen, that’s machine learning. And when a radiologist identifies potentially cancerous cells in a scan, they’re increasingly likely to be supported by machine learning.

In the last few years, one subset of AI has become increasingly popular, namely generative AI (or genAI). While ChatGPT led the way, it now shares the space with DeepSeek, Gemini, Grok and many other offerings. Some of these provide a user-friendly “chatbot” interface, while others can be embedded in other tools. But all have the purpose of generating content, whether that’s a written answer to a question, an image, a video or an entire blog post in response to the user’s prompt. (No AI was used in generating this article though!)

Conventional machine learning is useful for placing things into existing categories. One of the components we discuss below is a topic classifier, which identifies the topic (or topics) present in each news article we analyse. We chose a fixed list of 17 high-level topics, such as health, politics and education. We used a set of 10,000 articles that had been labelled with their topics to train a model, and this can now be used to add equivalent labels to new articles. This way, we get consistent and predictable labels which we use to filter and select articles.
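To make the idea of training on labelled examples concrete, here is a minimal sketch in Python. It is not the model we use: it swaps in a toy Naive Bayes classifier, assigns only one topic per article, and trains on six invented sentences rather than 10,000 real articles. The function names (`tokenize`, `train`, `predict`) are hypothetical.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Very crude tokenizer: lower-case and split on whitespace."""
    return text.lower().split()


def train(labelled_articles):
    """Count words per topic from (text, topic) pairs."""
    word_counts = defaultdict(Counter)
    topic_counts = Counter()
    vocabulary = set()
    for text, topic in labelled_articles:
        topic_counts[topic] += 1
        for word in tokenize(text):
            word_counts[topic][word] += 1
            vocabulary.add(word)
    return word_counts, topic_counts, vocabulary


def predict(model, text):
    """Return the topic with the highest Naive Bayes log-probability."""
    word_counts, topic_counts, vocabulary = model
    total_articles = sum(topic_counts.values())
    best_topic, best_score = None, -math.inf
    for topic in topic_counts:
        score = math.log(topic_counts[topic] / total_articles)
        total_words = sum(word_counts[topic].values())
        for word in tokenize(text):
            if word not in vocabulary:
                continue  # ignore words never seen in training
            # Add-one smoothing so unseen topic/word pairs do not zero the score.
            smoothed = word_counts[topic][word] + 1
            score += math.log(smoothed / (total_words + len(vocabulary)))
        if score > best_score:
            best_topic, best_score = topic, score
    return best_topic


# Invented training examples, for illustration only.
labelled_articles = [
    ("hospital waiting lists grew as nurses went on strike", "health"),
    ("the vaccine trial reported fewer hospital admissions", "health"),
    ("the prime minister faced questions from mps in parliament", "politics"),
    ("mps voted on the bill after a debate in parliament", "politics"),
    ("schools and teachers prepare pupils for exams", "education"),
    ("university students protest over tuition fees", "education"),
]

model = train(labelled_articles)
print(predict(model, "nurses warn of hospital pressure"))
print(predict(model, "mps debate the bill in parliament"))
```

Because the list of topics is fixed at training time, the classifier can only ever return one of those labels, which is what makes its output consistent and easy to filter on.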

GenAI models are trained on billions of sentences but without fixed labels like topics. Instead, these models have a very good idea of how words are put together to form sentences, and how sentences are typically sequenced, but no deeper understanding of their meaning. So we find them effective at answering questions like “Who is claiming what in this article?” and “Do these two claims mean essentially the same thing?”, because the answer is contained in the text itself. We never ask them “Is this claim true?” because no model, generative or conventional, can reliably answer that, as it depends on real-world knowledge and reasoning.

Fact checking with AI

At Full Fact, we’ve been developing AI tools for many years to support our fact checkers. Let’s consider what fact checkers actually do and how our tools help. Briefly, fact checkers identify public claims that are potentially harmful and misleading; investigate them; and write articles to put those claims into a clearer context. Along the way, they often contact people making these claims to ask them to explain or correct themselves or to stop repeating misleading or unsupported claims. By doing this, we hope both to increase the good information available to the public and to reduce the amount of bad information.

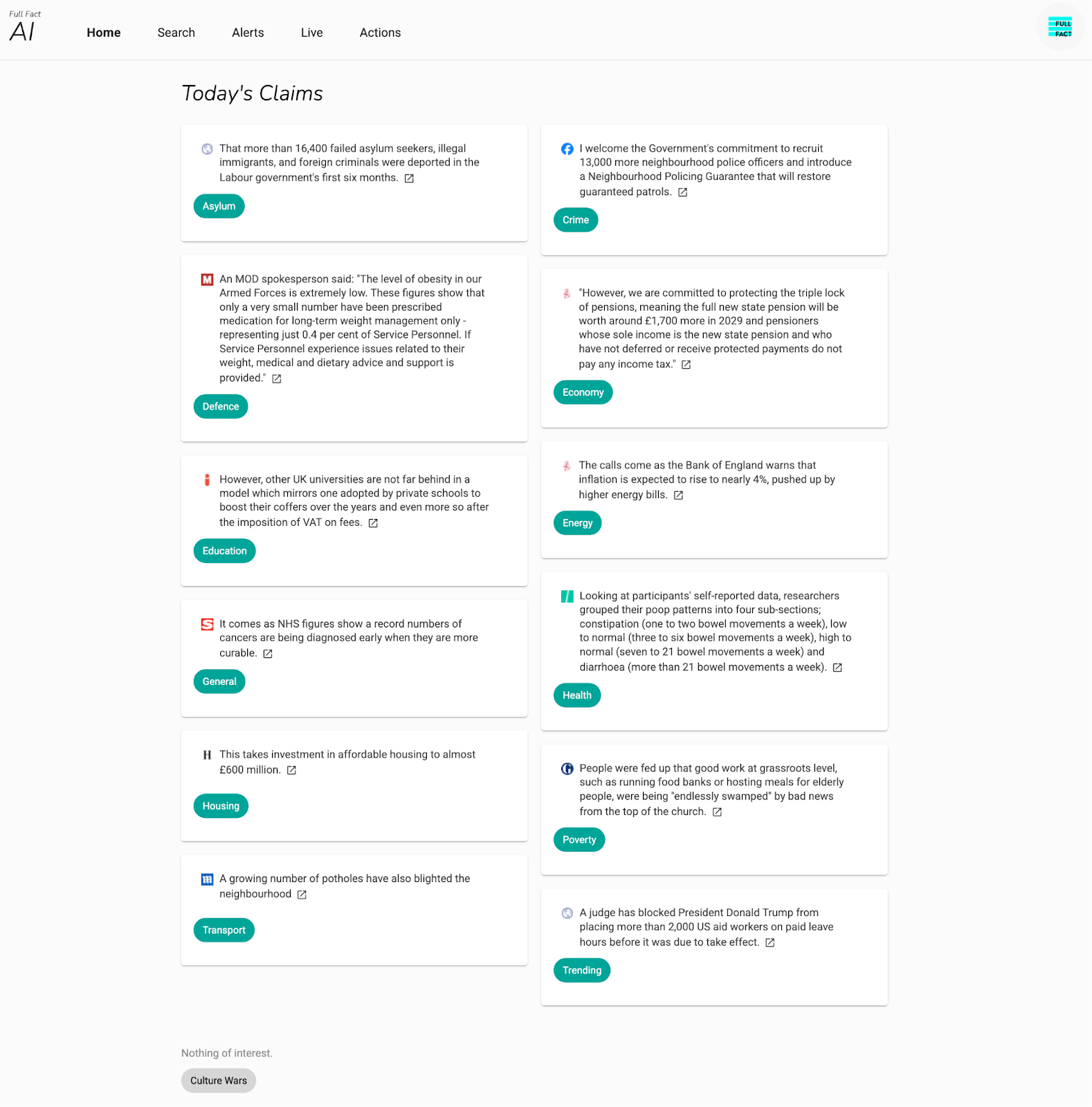

Because people can make misleading or harmful claims anywhere, fact checkers have to look everywhere to find them. Our media monitoring tools constantly review stories from daily newspapers, TV, radio, online videos, social media platforms and Hansard. Each article is tagged with its topic (as detected by one machine learning tool), then each claim within the article is labelled to show what class of claim it is and who is making it (using more AI models). At this point, each claim is also scored for “checkworthiness”: how likely is it that this claim is worth the fact checker’s time to investigate further? With this information, we can then display the most checkworthy claims under a series of topic headings each morning.
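The final grouping and ranking step can be sketched in a few lines of Python. This is only an illustration under assumptions, not our production code: the `Claim` fields, the 0-to-1 score scale, the `per_topic` limit and the example claims are all invented for the sketch.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Claim:
    # Hypothetical fields; in practice the labels and score come from trained models.
    text: str
    topic: str
    speaker: str
    checkworthiness: float  # assumed scale: 0.0 (ignore) to 1.0 (check first)


def top_claims_by_topic(claims, per_topic=3):
    """Group claims by topic and keep the highest-scoring ones for each topic."""
    grouped = defaultdict(list)
    for claim in claims:
        grouped[claim.topic].append(claim)
    return {
        topic: sorted(items, key=lambda c: c.checkworthiness, reverse=True)[:per_topic]
        for topic, items in sorted(grouped.items())
    }


# Invented example claims, for illustration only.
claims = [
    Claim("Waiting lists have doubled.", "health", "Speaker A", 0.91),
    Claim("I visited a hospital today.", "health", "Speaker B", 0.40),
    Claim("This treatment cures most cases.", "health", "Speaker C", 0.55),
    Claim("Class sizes are the largest on record.", "education", "Speaker A", 0.72),
]

for topic, ranked in top_claims_by_topic(claims, per_topic=2).items():
    print(topic.upper())
    for claim in ranked:
        print(f"  {claim.checkworthiness:.2f}  {claim.speaker}: {claim.text}")
```

Capping the number of claims per topic keeps the morning overview short enough to read, while the full set remains available for deeper searching.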

For example, when a study into prostate cancer screening was misreported, our checkworthy tool brought it to the attention of a fact checker who contacted the study’s author; the author corrected their report and contacted a newspaper, which also issued a correction to its coverage. Alongside more conventional media monitoring, this tool can guide fact checkers to the most important claims from a far wider range of media than any human team could otherwise keep tabs on.

We have started experimenting with using genAI to help identify checkworthy claims, especially health-related claims in videos. Our motivation is that genAI models are some of the most powerful large language models available and are very good at answering generic questions about even quite long documents (such as the transcript of a full-length video). Our tool is designed to find claims worth checking (not to decide if a claim is true or false), so we can ask it to find snippets that are making specific claims, or giving specific advice, or using emotive language, all within a broad category of health. By ranking the resulting snippets, we can guide our users to the specific parts of a video most likely to be of interest. The fact checkers can then watch the relevant parts of the video to make sure they understand the content of claims, before deciding whether to check them formally. For example, when a popular two-hour-long podcast shared serious vaccine misinformation, our tool was able to identify the relevant section, saving our fact checkers a lot of time.

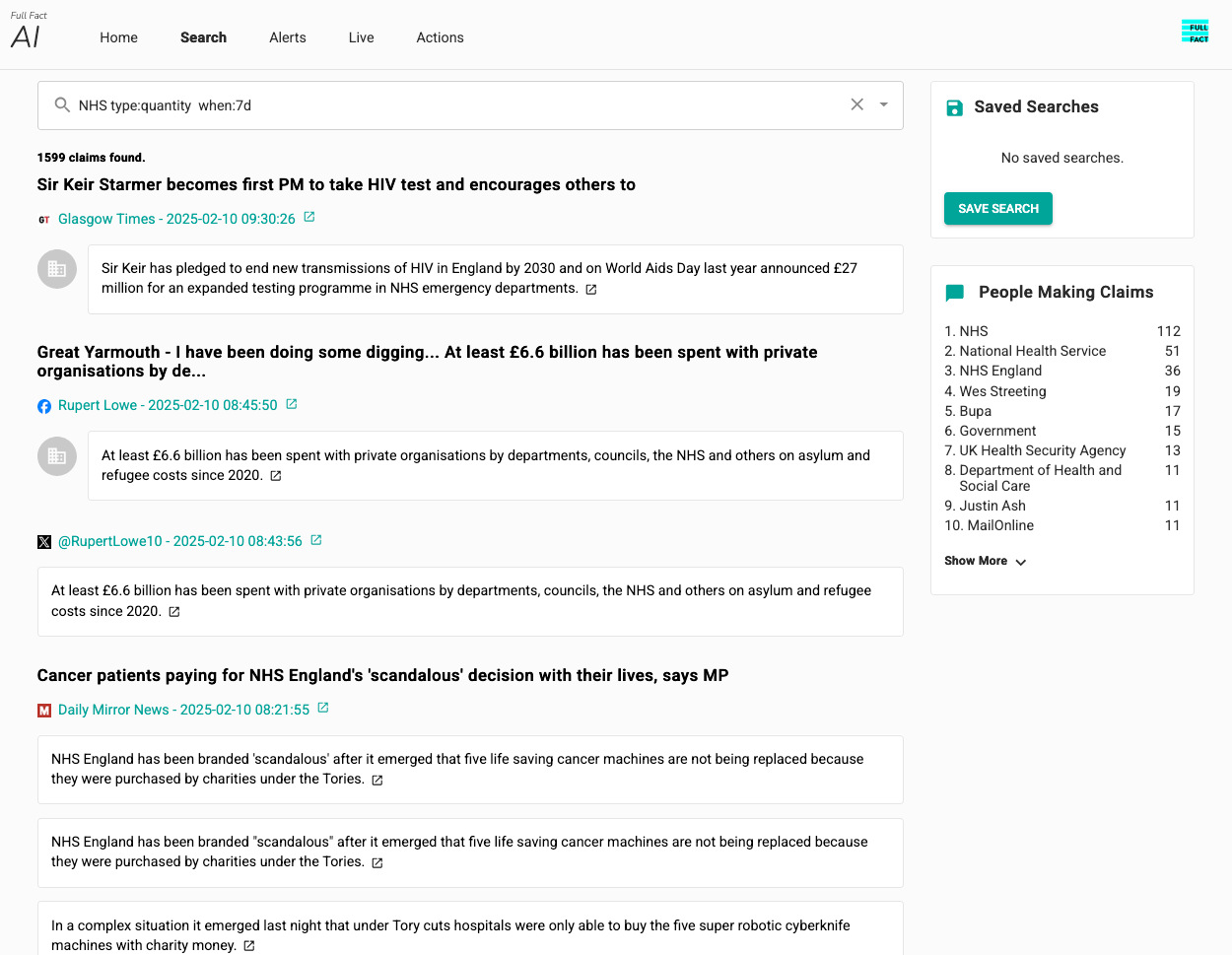

This checkworthy tool is designed to highlight some example claims and provides a useful overview of the day’s media. But often fact checkers will want to dive deeper into a topic, and for that we provide a tool that allows them to search all the media we monitor. Here, users can search by keywords or phrases and can apply various filters to see the range of claims that some individual has made recently, or see the range of people discussing a particular topic. They can choose to focus on social media posts or news reports or parliamentary reports, or to see comments made by one political party or another. This can be useful to find articles that provide the background context of a claim that may otherwise be harder to interpret.

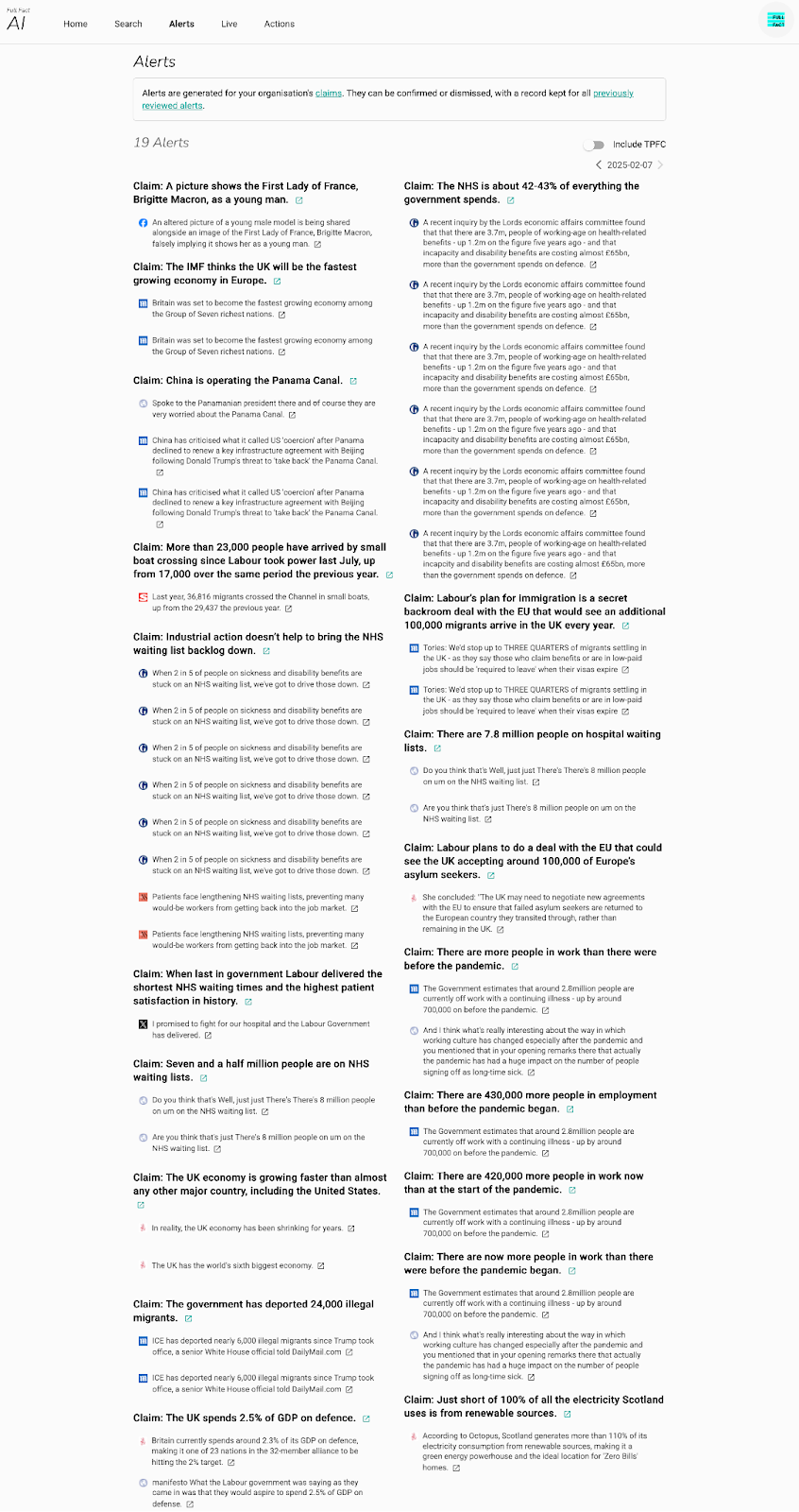

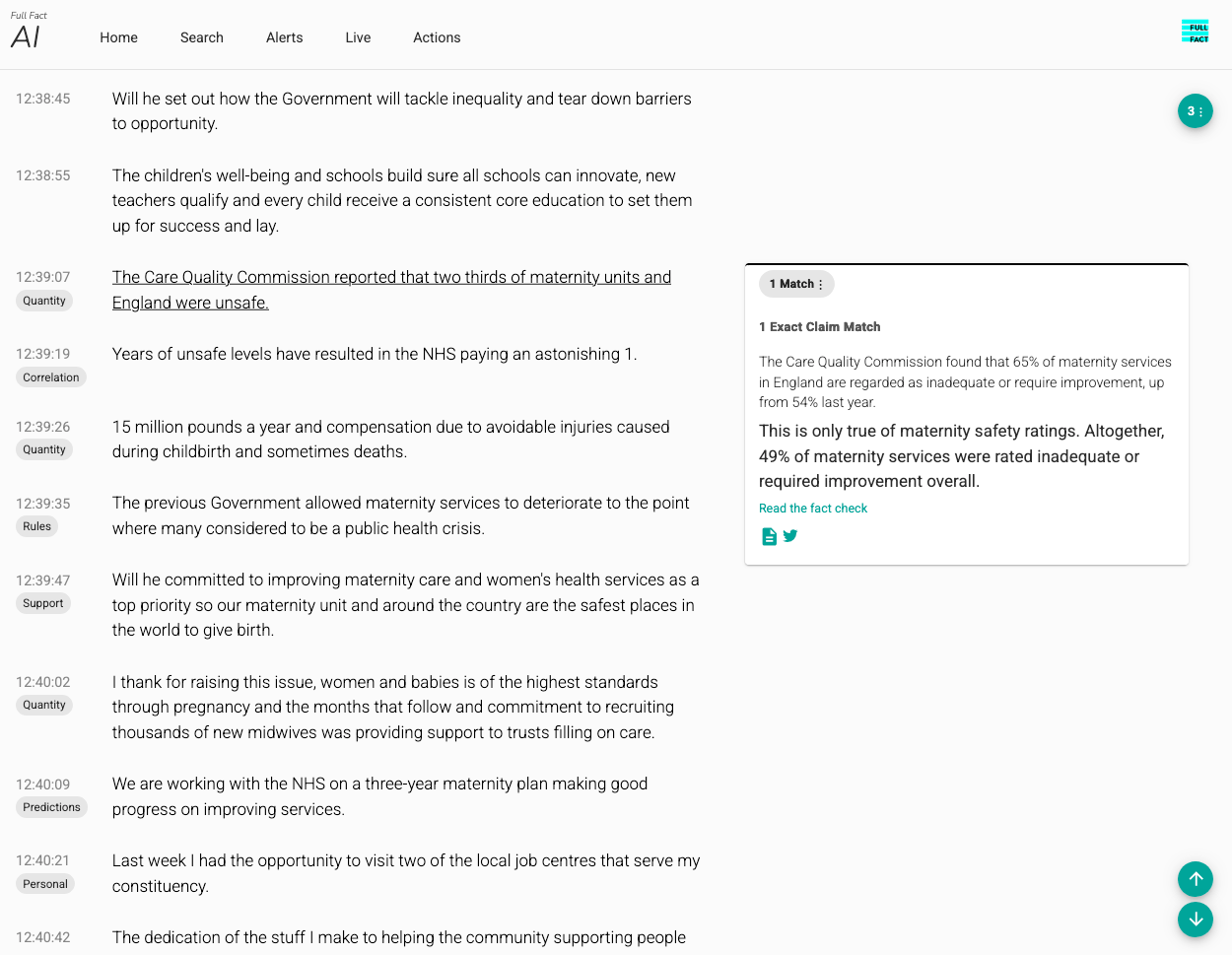

When a claim is found that is misleading and potentially harmful, fact checkers can research, write and publish an article putting the claim into its proper context for the benefit of our readers. But even after this, misleading claims are often repeated. For this reason, we developed a “claim matching” tool that compares all the media we monitor with all the claims that we recently checked. The resulting alerts allow us to (for example) contact an MP who keeps repeating out-of-date information or to ask a newspaper to publish a correction, as we did when a number of MPs were repeating the same misleading claim about migrant numbers. This is especially important when the tool highlights important trends, such as a particular pattern of misinformation or a repeated misleading claim.

Our claim matching tool starts by using a pre-existing large language model to convert claims and media sentences to vectors, which can then be compared directly. The more parallel the vectors are, the more semantically similar the sentences are, in theory. However, we found that this often confused different people or places, so we enhanced it with an entity recognition component and a part-of-speech tagger. We then trained a conventional neural network model to predict the likelihood of a match and produced a far more accurate tool.
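As a rough illustration of why the entity check matters, the sketch below compares vectors by cosine similarity and then tests whether the named entities agree. It rests on several assumptions: the three-dimensional vectors are toy values rather than real model output, the entity sets are supplied by hand, and `is_candidate_match` uses fixed, made-up thresholds as a stand-in for the trained neural network described above.

```python
import math


def cosine_similarity(a, b):
    """Return 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def entity_overlap(entities_a, entities_b):
    """Jaccard overlap between the named entities found in two sentences."""
    union = entities_a | entities_b
    if not union:
        return 1.0  # neither sentence names anyone, so nothing conflicts
    return len(entities_a & entities_b) / len(union)


def is_candidate_match(vec_a, ents_a, vec_b, ents_b,
                       min_similarity=0.9, min_overlap=0.5):
    """Hypothetical rule: both signals must agree (thresholds are invented)."""
    return (cosine_similarity(vec_a, vec_b) >= min_similarity
            and entity_overlap(ents_a, ents_b) >= min_overlap)


# Toy vectors and hand-written entity sets, for illustration only.
checked_claim = ([0.9, 0.1, 0.3], {"London"})
same_place = ([0.88, 0.12, 0.31], {"London"})
other_place = ([0.89, 0.11, 0.29], {"Manchester"})

print(is_candidate_match(*checked_claim, *same_place))   # vectors and entities agree
print(is_candidate_match(*checked_claim, *other_place))  # vectors agree, entities differ
```

The second comparison shows the failure mode mentioned above: the two sentences have nearly parallel vectors, yet they are about different places, so vector similarity alone would wrongly report a match.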

While news can break at any time, many important events follow a predictable schedule, such as the weekly PMQs in Westminster or televised debates during an election campaign. During these events, our tools can be used to generate a transcript in real time with all the features discussed above. Simply having a text representation of spoken words makes it much easier for fact checkers to keep track of what is being said and to share that with colleagues. This means that if a misleading claim is repeated by a politician live on air, for example, our fact checkers can be immediately alerted to it and can take action. These tools can also be used to analyse long videos or podcasts to help fact checkers zoom in and check any potentially problematic portions without having to spend too much time watching or listening to regular content.

Having found a claim worth checking, the fact checker will then carefully review the claim, research background information, find the source of statistical claims and (where appropriate) give a right-of-reply to the person or organisation making the claim. Then they write the full article, putting the claim into context so that anyone interested can educate themselves about the matter and make up their own mind. During this process, fact checkers may use AI-powered tools for reverse image search or conventional search engines. But the actual writing of the articles is done by the fact checkers themselves, followed by careful reviewing and editing by colleagues.

After developing these tools at Full Fact over a number of years, we’ve been keen to share their value more widely. Our tools are now used on a daily basis by over 40 fact checking organisations working in three languages across 30 countries, to help experts use their knowledge of national and regional affairs and issues of importance more effectively. Through 2024, the tools were used to support fact checkers monitoring 12 national elections. On a typical weekday, our tools process about a third of a million sentences in total.

When we introduced our tools to 25 Arabic-speaking fact checking organisations, they reported that media monitoring had become faster and simpler, and many started live monitoring of events for the first time. Over 200 fact check articles have been published about claims found there using our tools.

At Full Fact, we include a wide variety of technologies in our tools while being careful to use them appropriately. Our AI software can help with the overall fact checking process, allowing us to check more claims and provide a greater amount of reliable information than we could without it. We will always keep human experts in the loop, partly to minimise the risks around bias and hallucinations that come with fully automated systems but mostly because even the most advanced AI does not come close to human reasoning.